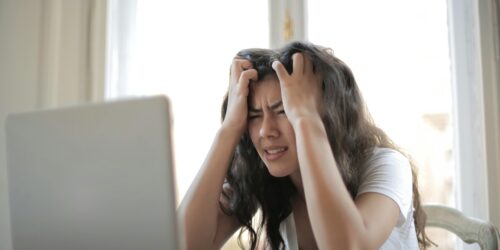

cPanel Dovecot 2.4 upgrade breaking Windows 7 clients

cPanel recently rolled out an upgrade from Dovecot 2.3 to 2.4 that has accidentally broken older IMAP and POP3 clients, such as those running on Windows 7, because the upgrade removes configuration those clients need to negotiate certain SSL/TLS ciphers. For example, Microsoft Outlook running on Windows 7 reports: Receiving reported error (0x800CCC1A) Your server does not support...